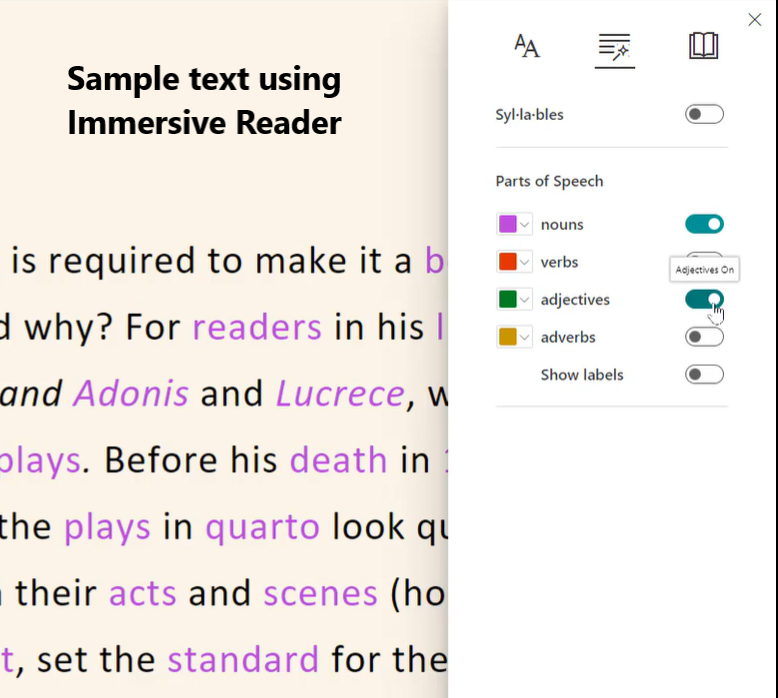

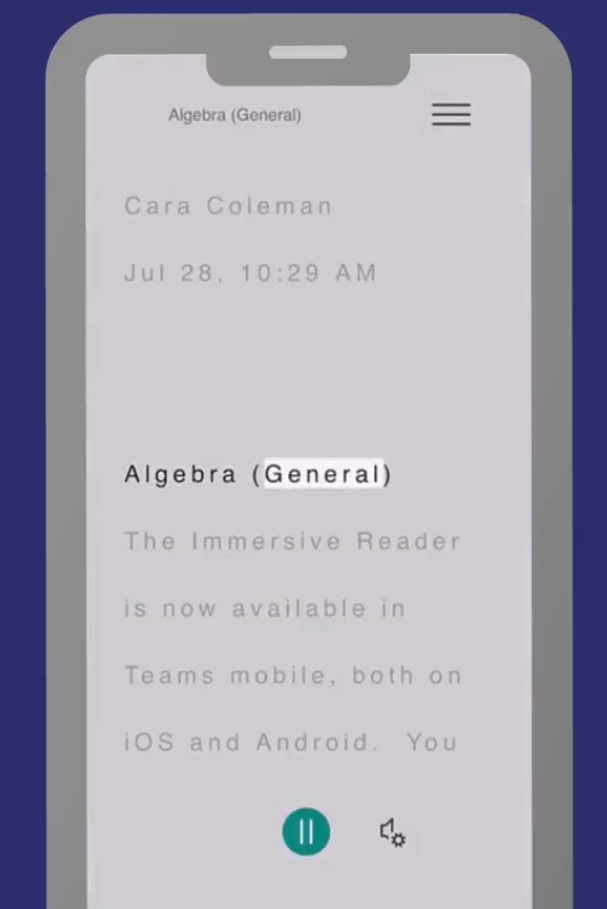

Immersive Reader can be used in many ways, and LexDis already has strategies for using this read-aloud and text support app on mobile and as a set of immersive reading tools with OneNote on Microsoft 365.

However, Ros Walker recently sent an email to the JISC Assistive technology list about some updates. One important point was her note about the app working with virtual learning environments through Blackboard Ally alternative formats. In Moodle, it is now possible to offer an ‘Immersive Reader’ option as an alternative format for most files that are added to a course.

In the student’s view of the Moodle course, they can select the A (Ally logo) at the end of the title of the file they want and are then presented with all the accessibility options. The University of Plymouth have provided guidance illustrating how this works from the staff and student perspectives, as well as accessibility checks.

Introduction to Ally and Immersive Reader for Moodle

Ros has also been kind enough to link to her video about Immersive Reader in Word, which shows how she has worked with PDFs to give students a really useful strategy for reading documents in different ways.

“If you haven’t seen the Immersive reader before, it is available in most Microsoft software and opens readings in a new window that is very clean and you can read the text aloud. (The Immersive Reader)”

Thanks to Ros Walker, University of St. Andrews

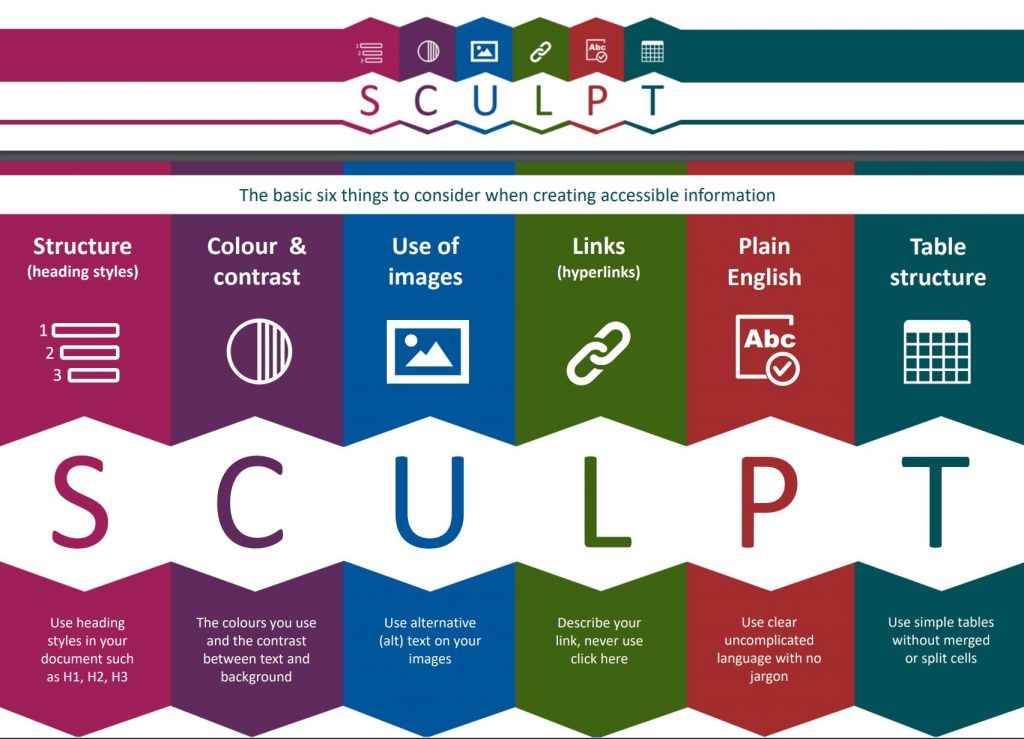

If you want to learn about digital accessibility in a fun way try the
