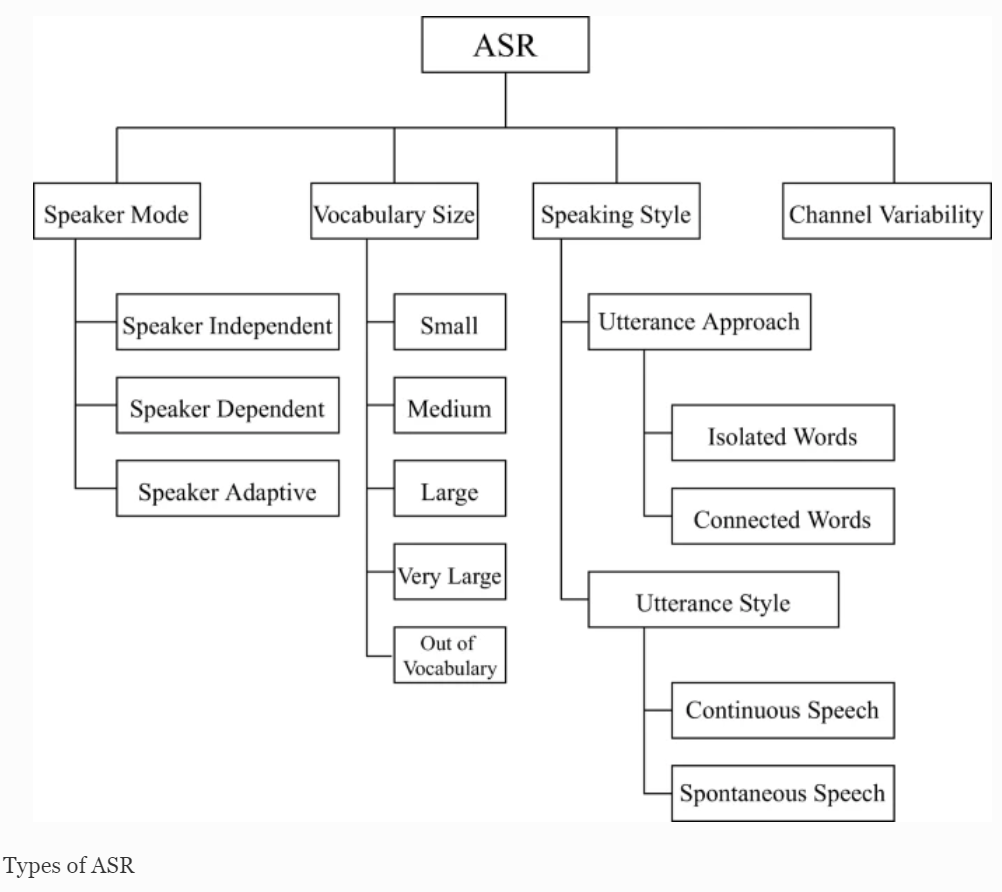

This blog explores an interesting idea: when biases affect the accuracy of a transcription made with automatic speech recognition (ASR), you might consider using voice cloning to gather different speech types that could improve the large language model used for the speech-to-text output. Voice cloning uses artificial intelligence (AI) to create a voice similar to one provided by a speaker, emulating their articulation and intonation patterns. It is not the same as speech synthesis, where a user chooses from a limited number of voices to provide text to speech. In theory, once you have cloned your voice, it is possible to fine-tune it to work with any language, as well as altering pitch and pace to convey different emotions. It might sound a bit robotic at times, lacking emotion where you expect some excited-sounding exclamations, but at other times it can be alarmingly accurate!

However, as we discussed in a past blog about evaluating ASR output, transcription errors may occur because of the way a person enunciates their words and the quality of their voice. When English is the language being used, various accents and dialects, ageing voices (both male and female), lack of clarity and speed of speaking can all increase the Word Error Rate (WER). Pronunciation may also be affected by imperfect knowledge of how words are said, whether because the words are complex or because English is not the speaker's first language.
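For readers who have not met the measure before, Word Error Rate is usually calculated as the number of word substitutions, deletions and insertions needed to turn the ASR output into the reference transcript, divided by the number of words in the reference. The sketch below is a minimal illustration of that standard definition, not the tool we used for our own evaluations. The function name `word_error_rate` and the example sentences are our own inventions, and the only text normalisation is lower-casing and splitting on whitespace, which is a simplifying assumption.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: a British pronunciation transcribed with a US
# spelling, plus one misheard word, gives 2 errors in 5 words.
print(word_error_rate("the aluminium sample was weighed",
                      "the aluminum sample was wade"))  # 0.4
```

Note that a spelling difference such as "aluminium" versus "aluminum" counts as an error here, which is one reason accent and regional conventions can inflate the score even when the meaning is preserved.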

Could AI help by providing a series of voices cloned from the very types of speech that cause problems for automated transcription? We have been experimenting, and there are several issues to consider. The first arises when you discover that the company selling the cloned voices is based in the United States: when you try your British English with limited training of the model, your voice develops an American accent. In the case of another company, based in Berlin, the voice takes on a distinctly European accent with a hint of German. These voices can be tweaked and would probably improve with several hours of training.

This leads to the second issue: the amount of dictation needed to improve results. Tests with 30 minutes of dictation improved the quality but did not solve some problems, especially when working with STEM subjects, where complex words may have been pronounced incorrectly during training or did not match the pronunciation expected from the voice cloning company's earlier training. So unless a word has been pronounced with an American accent, the system tends to produce an error or to change the original pronunciation to one accepted in the United States but not in the United Kingdom!

Finally, there is always a cost involved when training is needed for longer periods. As a lecture is usually around 45 minutes long, any experiment should capture a speaker for at least this long in order to cater for the changes that can occur in speech over time. For example, tiredness or even poor use of recording devices can change the quality of the output. It has also been found that the cloned voice may not be perfectly replicated if you only have short training periods of a few minutes. However, 100 sentences of general dictation, where all the words can be read accurately, can produce a good result with speech clear enough for transcription. With a basic Resemble.ai account, transcripts have to be divided into 3000-character sections, which means you need on average at least 11 sections of cloned voice for a whole lecture. Misspoken words can be changed, and using the phonetic alphabet allows particular parts of words to be adapted. Emotion can also be added, although this takes time, and there are several language options for different accents.
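Dividing a lecture transcript into sections by hand is tedious, so a small script can help. The sketch below is one possible approach, not part of any Resemble.ai tooling: the function name `split_into_sections` is our own, and the choice to break on sentence endings (so that a section never stops mid-sentence unless a single sentence exceeds the limit) is an assumption about what gives the most natural intonation. The figure of 11 sections corresponds to a transcript of just over 30,000 and up to 33,000 characters.

```python
import re
import textwrap


def split_into_sections(text: str, limit: int = 3000) -> list[str]:
    """Pack whole sentences into sections of at most `limit` characters."""
    if limit < 1:
        raise ValueError("limit must be at least 1")

    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())

    # A sentence longer than the limit is wrapped on word boundaries.
    pieces = []
    for sentence in sentences:
        if not sentence:
            continue
        if len(sentence) <= limit:
            pieces.append(sentence)
        else:
            pieces.extend(textwrap.wrap(sentence, width=limit))

    # Greedily add pieces to the current section until it would overflow.
    sections = []
    current = ""
    for piece in pieces:
        candidate = piece if not current else current + " " + piece
        if len(candidate) <= limit:
            current = candidate
        else:
            sections.append(current)
            current = piece
    if current:
        sections.append(current)
    return sections


# Small illustration with a deliberately tiny limit.
for section in split_into_sections("One two three. Four five.", limit=10):
    print(repr(section))
```

Each returned section can then be pasted, or submitted, as a separate piece of text for the cloned voice to read, and the resulting audio clips joined in order.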