Eum

Age: 21 years

Course: Computer Science 1st Year

Hobbies: coding, cooking and travelling

Background:

Eum developed glaucoma meaning that as his peripheral vision has deteriorated and his central vision has become foggy, he spends more time zooming in to read text rather than zooming out. He has had to depend more and more on assistive technologies to help with his computer science studies, having changed his plans that were to become a chef. Eum loves cooking, but the stress of a restaurant kitchen coping with lots of unexpected obstacles became too challenging. As time has passed he also found he does not always recognise faces or signs in the distance. He tends to trip up on curbs or steps, especially when it gets dark or there is low lighting, however, this has not stopped his love of exploring new places when on holiday with family.

Reading can be difficult without careful targeted magnification and he tends to set up his two computer screens with good contrast levels for black on white text or other high colour contrast options, whilst trying to reduce reflections from windows and lights. At times he finds it easier to move the text in front of his eyes rather than scanning across text because of his reduced field of vision and the depth of focus on individual sections of text needed. Time is often an issue as assignments tend to take longer to complete when reading only a few letters in one glance. Eum uses a magnification cum screen reading program on a Windows machine, so that he can access various arrow heads, crosshair and line cursor sizes with focus tracking. The latter means that items are magnified as he moves across and down the screen. A high visibility keyboard helps, as does his portable magnification stand for reading paper-based materials.

Although Eum uses audio books on his tablet for listening to novels, he prefers to read academic papers and notes on his computer, where he can add annotations. Messaging and emails using his smart phone are quicker with speech recognition and text to speech output, although Eum does not depend totally on the screen reader technology for navigation. He has learnt over time where items are to be found and is very disciplined about how he personalises his desktop and filing system on all his devices. As a computer scientist in the making, Eum has become adept at changing browser settings with ad blockers and other extensions, but this does not compensate for the clutter he finds on many web sites. He becomes frustrated when developers fail to realise the importance of avoiding overlaps and disappearing content when sites are magnified. He feels scrolling horizontally should not be necessary on websites especially when form filling, as this is a particular challenge with text fields or modal windows that go missing or where there is no logical order to the layout.

Main Strategies to overcome Barriers to Access

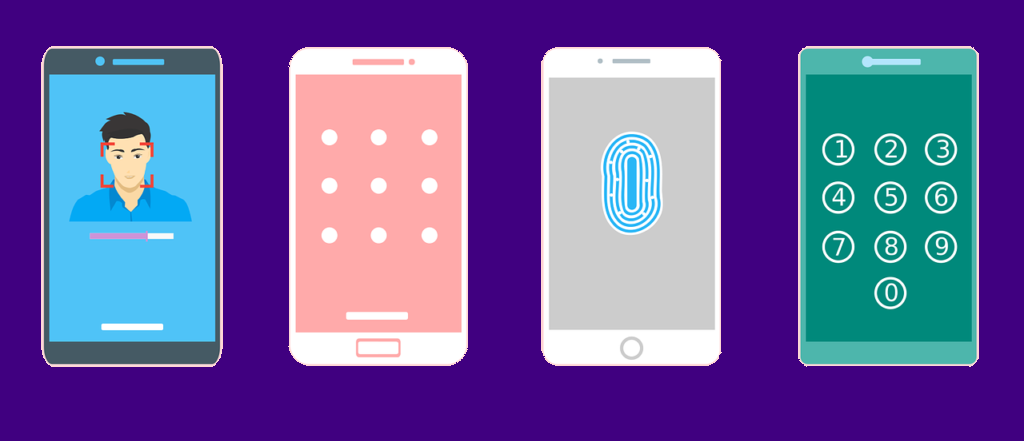

Multifactor authentication for password verification. Eum usually manages the initial password and use of mobile authenticator apps, SMS or knowledge-based challenge questions better than the grid style image or Captcha options. Time can be Eum’s real problem if he has to cope with different types of authentication where items disappear before he has had a chance to memorise or note down the code. He cannot use retina-scan technology, but finger and speech recognition work well. (Web Content Accessibility Guidelines (WCAG) 2.2 Success Criterion 3.3.7 Accessible Authentication)

Keyboard Access for navigation helps Eum as he has memorised many short cuts when surfing the web and finds it easier than mouse use when he has to track the arrow head or cursor. He depends on web pages appearing and operating in predictable ways (W3C WCAG Predictable: Understanding Guideline 3.2).

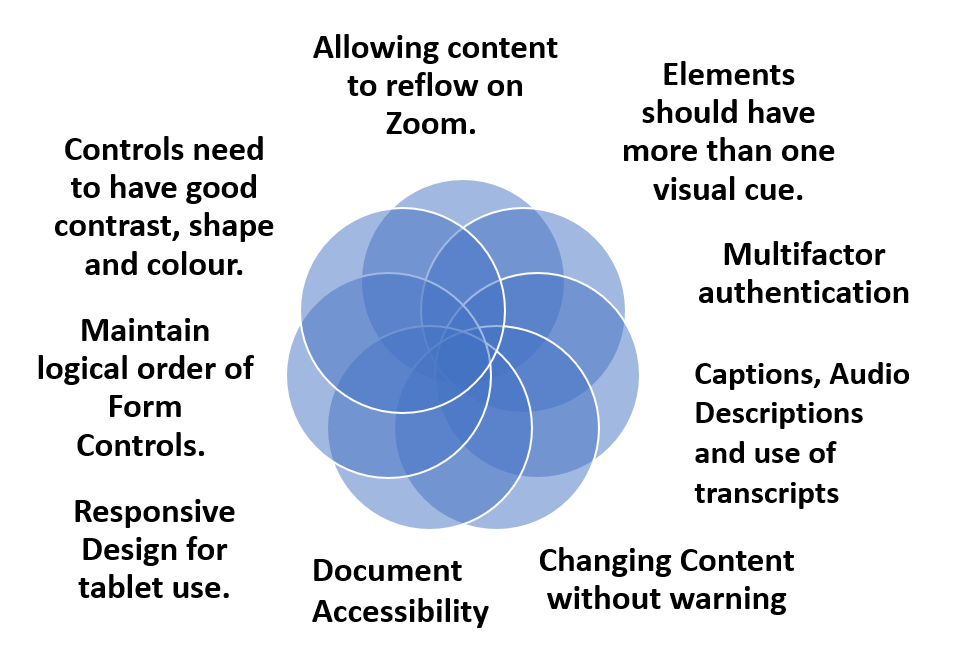

Allowing content to reflow on Zoom. Eum reads magnified content using large fonts that need mean a page has to reflow vertically (as a column) and remain fully legible and logical without the need for horizontal scrolling (W3C WCAG 1.4.4 Resize text (Level AA)). User agents that satisfy UAAG 1.0 Checkpoint 4.1 allow users to configure text scale.

Maintain logical order of Form Controls. Eum benefits from the logical order of form controls with labels close to the fields to which they relate so that the flow is vertical on magnification rather than horizontal. Dividing forms into small sections when they are long or complex with clear headings really helps. However, careful checks need to be made with borders of buttons etc so that they do not overlap other controls or disappear from the screen with high levels of magnification.

Controls need to have good contrast, shape and colour. Text needs to be distinct. The importance of contrast levels for all aspects of a service cannot be stressed too highly and yet it is often one of the items that fails in accessibility checks. Webaim provide clear guidance Understanding WCAG 2 Contrast and Color Requirements. It is also vital that text and images of text are distinct from their surroundings – WCAG SC 1.4.3 (Contrast – Minimum)

Elements should have more than one visual cue. Eum finds it really helps when he is selecting features on a web page to have icons, colour as well as text such as when there is an alert. Underlined text should just be used for hyperlinks. Links and other actionable elements must be clearly distinguishable.

Changing Content without warning. Because Eum uses assistive technology, whether it is his magnification software or speech recognition, he finds that any visual changes that occur to the interface or actions that happen without the page refreshing can cause problems. He needs to be notified about the changes W3C WCAG 2.0 Name, Role, Value: Understanding SC 4.1.2 and there is also the need to Understand Success Criterion 4.1.3: Status Messages.

Responsive Design for tablet use. Eum likes to use his tablet, but has to depend on its built-in access technologies and the responsive design for accessibility provided by the developers of web services as well as their customisable options. He relies on a consistent layout throughout a web service with sufficient space between interactive items such as buttons. A11y project mobile and touch checklist for accessibility.

Captions, Audio Descriptions and use of transcripts. Videos can be very tiring to watch and so Eum uses captions with high contrast colours to make them stand out more against the background of the video images, using highlighter systems to mark key points in the transcripts and depends on audio descriptions if scenes are not well described in the commentary on the video.

Document accessibility whether online or as a PDF download or other format, Eum needs to make changes to suit his narrow field of vision. WCAG Understanding Success Criterion 1.4.8: Visual Presentation has some useful guidance. WebAim also have an easy to follow set of instructions for making ‘Accessible Documents: Word, PowerPoint, & Acrobat’.

Key points from Eum

“Allow for magnification with zooming in to read text in small chunks, taking care to make visual presentation as logical as possible for vertical scrolling preferably in one column layout”

There is a useful document called “Accessibility Requirements for People with Low Vision” created as a W3C Editor’s Draft (04 November 2021)